Face and Facial Keypoint detection¶

After you've trained a neural network to detect facial keypoints, you can then apply this network to any image that includes faces. The neural network expects a Tensor of a certain size as input and, so, to detect any face, you'll first have to do some pre-processing.

- Detect all the faces in an image using a face detector (we'll be using a Haar Cascade detector in this notebook).

- Pre-process those face images so that they are grayscale, and transformed to a Tensor of the input size that your net expects. This step will be similar to the

data_transformyou created and applied in Notebook 2, whose job was tp rescale, normalize, and turn any iimage into a Tensor to be accepted as input to your CNN. - Use your trained model to detect facial keypoints on the image.

In the next python cell we load in required libraries for this section of the project.

import numpy as np

import matplotlib.pyplot as plt

import matplotlib.image as mpimg

%matplotlib inline

Select an image¶

Select an image to perform facial keypoint detection on; you can select any image of faces in the images/ directory.

import cv2

# load in color image for face detection

image = cv2.imread('images/obamas.jpg')

# switch red and blue color channels

# --> by default OpenCV assumes BLUE comes first, not RED as in many images

image = cv2.cvtColor(image, cv2.COLOR_BGR2RGB)

# plot the image

fig = plt.figure(figsize=(9,9))

plt.imshow(image)

Detect all faces in an image¶

Next, you'll use one of OpenCV's pre-trained Haar Cascade classifiers, all of which can be found in the detector_architectures/ directory, to find any faces in your selected image.

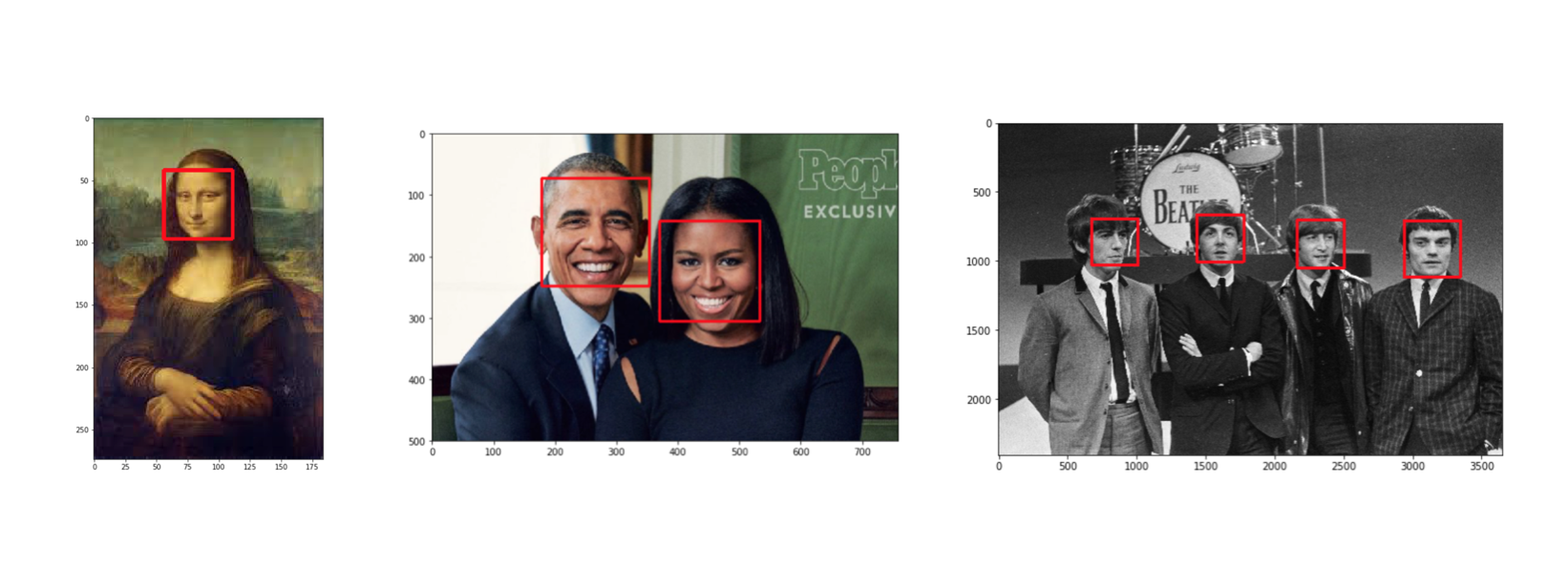

In the code below, we loop over each face in the original image and draw a red square on each face (in a copy of the original image, so as not to modify the original). You can even add eye detections as an optional exercise in using Haar detectors.

An example of face detection on a variety of images is shown below.

# load in a haar cascade classifier for detecting frontal faces

face_cascade = cv2.CascadeClassifier('detector_architectures/haarcascade_frontalface_default.xml')

# run the detector

# the output here is an array of detections; the corners of each detection box

# if necessary, modify these parameters until you successfully identify every face in a given image

faces = face_cascade.detectMultiScale(image, 1.2, 2)

# make a copy of the original image to plot detections on

image_with_detections = image.copy()

# loop over the detected faces, mark the image where each face is found

for (x,y,w,h) in faces:

# draw a rectangle around each detected face

# you may also need to change the width of the rectangle drawn depending on image resolution

cv2.rectangle(image_with_detections,(x,y),(x+w,y+h),(255,0,0),3)

fig = plt.figure(figsize=(9,9))

plt.imshow(image_with_detections)

Loading in a trained model¶

Once you have an image to work with (and, again, you can select any image of faces in the images/ directory), the next step is to pre-process that image and feed it into your CNN facial keypoint detector.

First, load your best model by its filename.

import torch

from models import Net

net = Net()

## TODO: load the best saved model parameters (by your path name)

## You'll need to un-comment the line below and add the correct name for *your* saved model

net.load_state_dict(torch.load('saved_models/keypoints_model_v3_2.pt')['state_dict'])

## print out your net and prepare it for testing (uncomment the line below)

net.eval()

Keypoint detection¶

Now, we'll loop over each detected face in an image (again!) only this time, you'll transform those faces in Tensors that your CNN can accept as input images.

TODO: Transform each detected face into an input Tensor¶

You'll need to perform the following steps for each detected face:

- Convert the face from RGB to grayscale

- Normalize the grayscale image so that its color range falls in [0,1] instead of [0,255]

- Rescale the detected face to be the expected square size for your CNN (224x224, suggested)

- Reshape the numpy image into a torch image.

Hint: The sizes of faces detected by a Haar detector and the faces your network has been trained on are of different sizes. If you find that your model is generating keypoints that are too small for a given face, try adding some padding to the detected roi before giving it as input to your model.

You may find it useful to consult to transformation code in data_load.py to help you perform these processing steps.

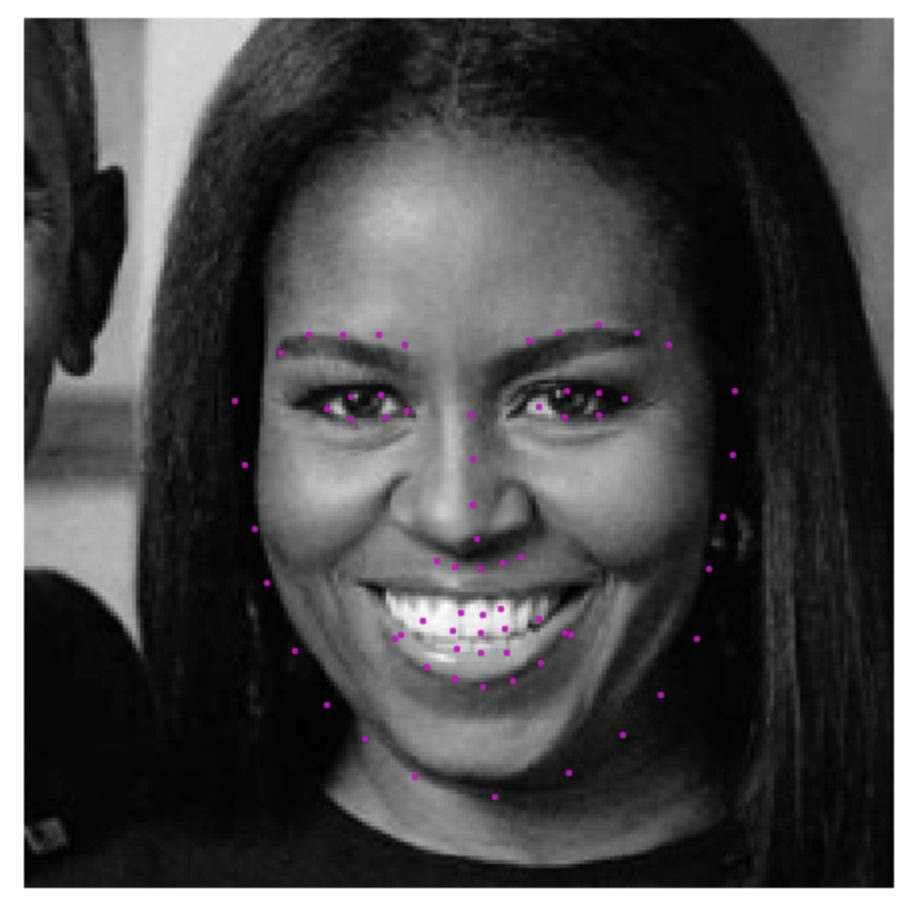

TODO: Detect and display the predicted keypoints¶

After each face has been appropriately converted into an input Tensor for your network to see as input, you can apply your net to each face. The ouput should be the predicted the facial keypoints. These keypoints will need to be "un-normalized" for display, and you may find it helpful to write a helper function like show_keypoints. You should end up with an image like the following with facial keypoints that closely match the facial features on each individual face:

image_copy = np.copy(image)

# loop over the detected faces from your haar cascade

for (x,y,w,h) in faces:

# Select the region of interest that is the face in the image

roi = image_copy[y:y+h, x:x+w]

## TODO: Convert the face region from RGB to grayscale

roi = cv2.cvtColor(roi, cv2.COLOR_RGB2GRAY)

## TODO: Normalize the grayscale image so that its color range falls in [0,1] instead of [0,255]

roi = roi/255.0

## TODO: Rescale the detected face to be the expected square size for your CNN (224x224, suggested)

size = 224

roi = cv2.resize(roi, (size, size))

roi_i = np.copy(roi)

## TODO: Reshape the numpy image shape (H x W x C) into a torch image shape (C x H x W)

roi = torch.Tensor(np.reshape(roi, (1, roi.shape[0], roi.shape[1])))

# print(roi.shape)

roi = torch.unsqueeze(roi, 0)

# print(roi.shape)

## TODO: Make facial keypoint predictions using your loaded, trained network

with torch.no_grad():

key_pts = net(roi)

key_pts = key_pts.view(key_pts.size()[0], 68, -1)[0].data.numpy()

key_pts = key_pts*90.0 + 90

# print(key_pts)

## TODO: Display each detected face and the corresponding keypoints

plt.imshow(roi_i, cmap='gray')

plt.scatter(key_pts[:, 0], key_pts[:, 1], s=20, marker='.', c='m')

#plt.axis('off')

plt.show()