Facial Filters¶

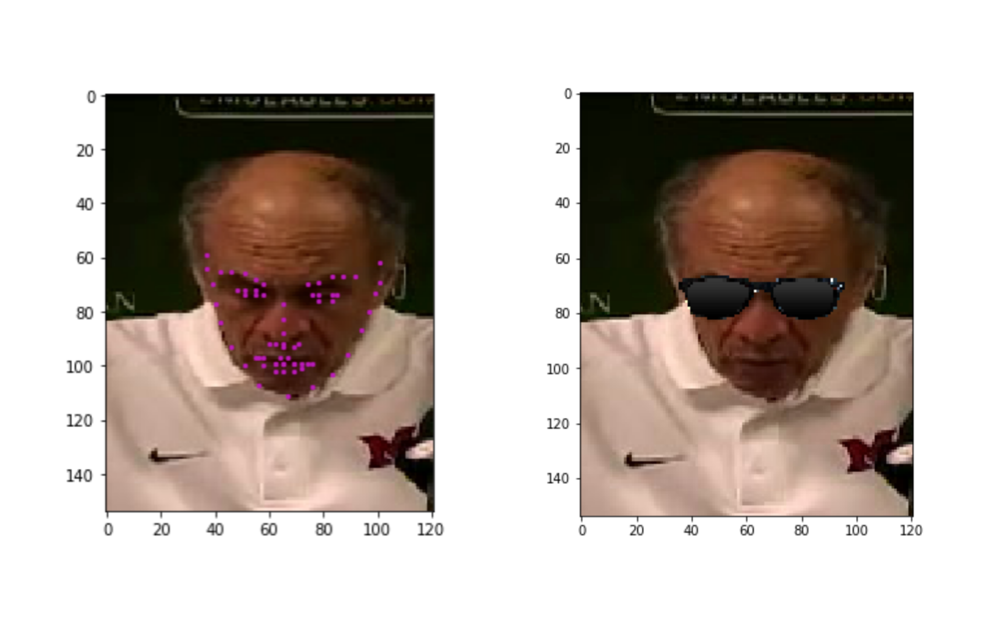

Using your trained facial keypoint detector, you can now do things like add filters to a person's face, automatically. In this optional notebook, you can play around with adding sunglasses to detected face's in an image by using the keypoints detected around a person's eyes. Checkout the images/ directory to see what pther .png's have been provided for you to try, too!

Let's start this process by looking at a sunglasses .png that we'll be working with!

# import necessary resources

import matplotlib.image as mpimg

import matplotlib.pyplot as plt

import numpy as np

import pandas as pd

import os

import cv2

# load in sunglasses image with cv2 and IMREAD_UNCHANGED

sunglasses = cv2.imread('images/sunglasses.png', cv2.IMREAD_UNCHANGED)

# plot our image

plt.imshow(sunglasses)

# print out its dimensions

print('Image shape: ', sunglasses.shape)

The 4th dimension¶

You'll note that this image actually has 4 color channels, not just 3 as your avg RGB image does. This is due to the flag we set cv2.IMREAD_UNCHANGED, which tells this to read in another color channel.

Alpha channel¶

It has the usual red, blue, and green channels any color image has, and the 4th channel respresents the transparency level of each pixel in the image; this is often called the alpha channel. Here's how the transparency channel works: the lower the value, the more transparent, or see-through, the pixel will become. The lower bound (completely transparent) is zero here, so any pixels set to 0 will not be seen; these look like white background pixels in the image above, but they are actually totally transparent.

This transparent channel allows us to place this rectangular image of sunglasses on an image of a face and still see the face area that is techically covered by the transparentbackground of the sunglasses image!

Let's check out the alpha channel of our sunglasses image in the next Python cell. Because many of the pixels in the background of the image have an alpha value of 0, we'll need to explicitly print out non-zero values if we want to see them.

# print out the sunglasses transparency (alpha) channel

alpha_channel = sunglasses[:,:,3]

print ('The alpha channel looks like this (black pixels = transparent): ')

plt.imshow(alpha_channel, cmap='gray')

# just to double check that there are indeed non-zero values

# let's find and print out every value greater than zero

values = np.where(alpha_channel != 0)

print ('The non-zero values of the alpha channel are: ')

print (values)

Overlaying images¶

This means that when we place this sunglasses image on top of another image, we can use the transparency channel as a filter:

- If the pixels are non-transparent (alpha_channel > 0), overlay them on the new image

Keypoint locations¶

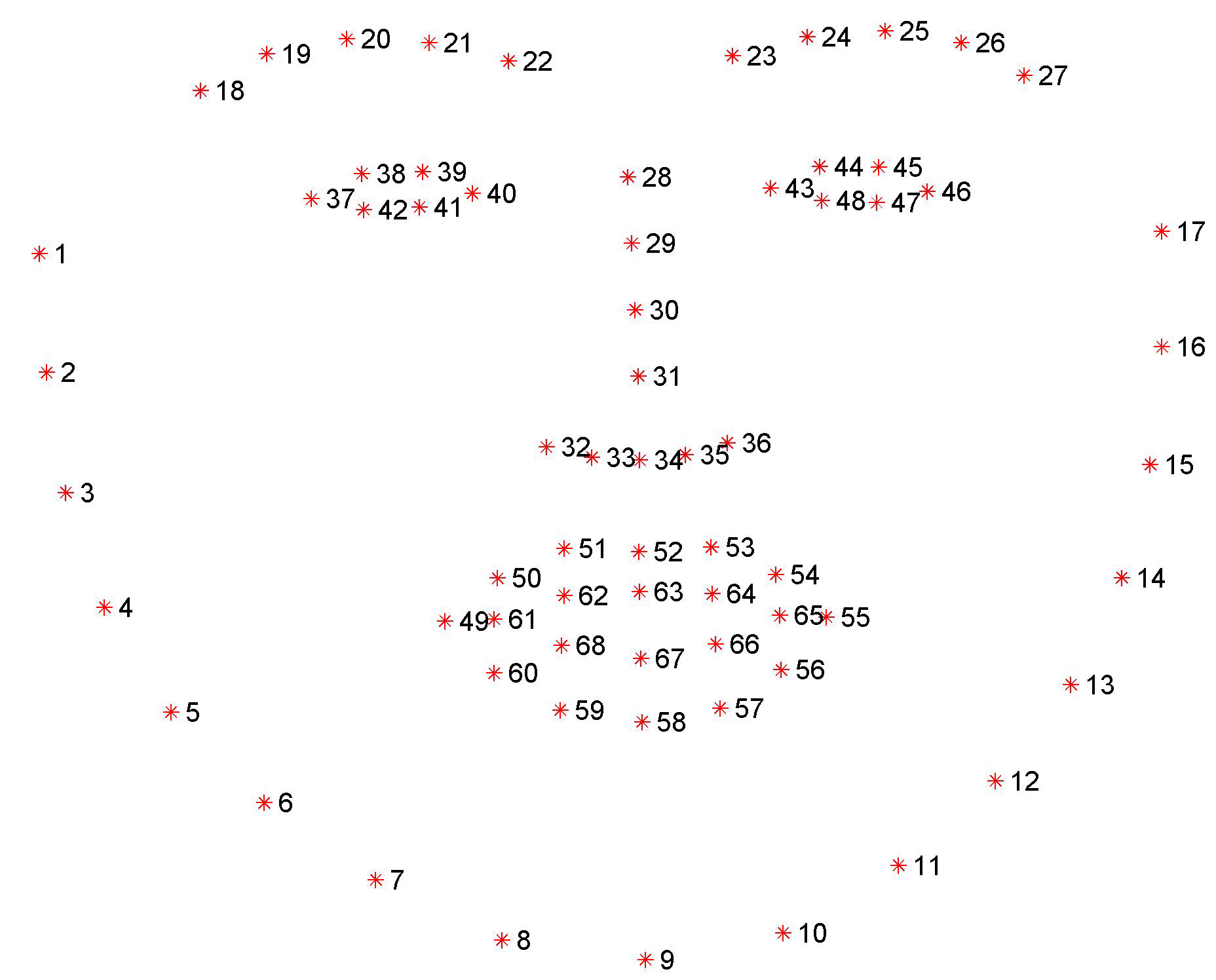

In doing this, it's helpful to understand which keypoint belongs to the eyes, mouth, etc., so in the image below we also print the index of each facial keypoint directly on the image so you can tell which keypoints are for the eyes, eyebrows, etc.,

It may be useful to use keypoints that correspond to the edges of the face to define the width of the sunglasses, and the locations of the eyes to define the placement.

Next, we'll load in an example image. Below, you've been given an image and set of keypoints from the provided training set of data, but you can use your own CNN model to generate keypoints for any image of a face (as in Notebook 3) and go through the same overlay process!

# load in the data if you have not already!

# otherwise, you may comment out this cell

# -- DO NOT CHANGE THIS CELL -- #

!mkdir /data

!wget -P /data/ https://s3.amazonaws.com/video.udacity-data.com/topher/2018/May/5aea1b91_train-test-data/train-test-data.zip

!unzip -n /data/train-test-data.zip -d /data

# load in training data

key_pts_frame = pd.read_csv('/data/training_frames_keypoints.csv')

# print out some stats about the data

print('Number of images: ', key_pts_frame.shape[0])

# helper function to display keypoints

def show_keypoints(image, key_pts):

"""Show image with keypoints"""

plt.imshow(image)

plt.scatter(key_pts[:, 0], key_pts[:, 1], s=20, marker='.', c='m')

# a selected image

n = 120

image_name = key_pts_frame.iloc[n, 0]

image = mpimg.imread(os.path.join('/data/training/', image_name))

key_pts = key_pts_frame.iloc[n, 1:].as_matrix()

key_pts = key_pts.astype('float').reshape(-1, 2)

print('Image name: ', image_name)

plt.figure(figsize=(5, 5))

show_keypoints(image, key_pts)

plt.show()

Next, you'll see an example of placing sunglasses on the person in the loaded image.

Note that the keypoints are numbered off-by-one in the numbered image above, and so key_pts[0,:] corresponds to the first point (1) in the labelled image.

# Display sunglasses on top of the image in the appropriate place

# copy of the face image for overlay

image_copy = np.copy(image)

# top-left location for sunglasses to go

# 17 = edge of left eyebrow

x = int(key_pts[17, 0])

y = int(key_pts[17, 1])

# height and width of sunglasses

# h = length of nose

h = int(abs(key_pts[27,1] - key_pts[34,1]))

# w = left to right eyebrow edges

w = int(abs(key_pts[17,0] - key_pts[26,0]))

# read in sunglasses

sunglasses = cv2.imread('images/sunglasses.png', cv2.IMREAD_UNCHANGED)

# resize sunglasses

new_sunglasses = cv2.resize(sunglasses, (w, h), interpolation = cv2.INTER_CUBIC)

# get region of interest on the face to change

roi_color = image_copy[y:y+h,x:x+w]

# find all non-transparent pts

ind = np.argwhere(new_sunglasses[:,:,3] > 0)

# for each non-transparent point, replace the original image pixel with that of the new_sunglasses

for i in range(3):

roi_color[ind[:,0],ind[:,1],i] = new_sunglasses[ind[:,0],ind[:,1],i]

# set the area of the image to the changed region with sunglasses

image_copy[y:y+h,x:x+w] = roi_color

# display the result!

plt.imshow(image_copy)

Further steps¶

Look in the images/ directory to see other available .png's for overlay! Also, you may notice that the overlay of the sunglasses is not entirely perfect; you're encouraged to play around with the scale of the width and height of the glasses and investigate how to perform image rotation in OpenCV so as to match an overlay with any facial pose.